Search marketing relies on data collected by bots, which allow the search engines to rank websites and individual pages. We all know that, but what we perhaps didn't know is that well over half of all website traffic is generated by these bots.

It has been suggested that over 60 per cent of all website traffic is not human at all, but comes from bots.

This means that the biggest visitor to your website is likely a bot rather than a human user.

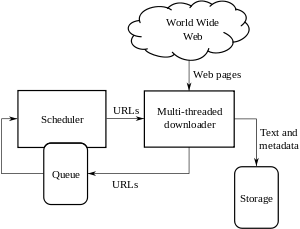

The search engines use bots (automated software tools) to crawl through sites and collect data, mostly from the meta tags and the onsite content. An algorithm then uses this data to draw conclusions, such as why one site should rank above another.

The use of bots has risen by over 20 per cent in the last year, and this figure is likely to increase further.

Not all bots are good bots, though, and some have malicious intent. These bots exist to steal data, spread scam emails or post ads. However, the latest figures suggest that most bots in use have good intentions, such as collecting search data.

Dr Ian Brown, an associate director at Oxford University's Cyber Centre, told the BBC that he considered the latest figures helpful because they showed how traffic was growing in a non-human manner, while also cautioning: "Their own customers may or may not be representative of the wider web. There will also be some unavoidable fuzziness in their data, given that they are trying to measure malicious website visits where by definition the visitors are trying to disguise their origin."

The figures also showed that the use of good bots has risen by over 50 per cent.

Clickmate monitors bot activity across all of its sites.